Analyze high-velocity data and take action instantly

Ensure business-critical data is available for analytics and operational use cases with event streaming support from Fivetran.

Signs you may benefit from streaming data

As your organization grows, batch processing may no longer be suitable for all your use cases.

Growing data volumes

Inability to meet SLAs

Miscommunication among employees, customers or vendors

Greater inventory yield variance

Lack of competitive advantage

Use cases that require instant action, such as fraud detection

Automated replication for streaming use cases

Quickly and easily replicate data from your event and streaming sources to your destinations with Fivetran's fully managed pipelines.

Load data to your cloud destination with our fully managed event and streaming data source connectors

Send data from Fivetran to your streaming platforms

Collect event data in your cloud storage solution

Gain real-time insights with streaming data movement

Get instant information about financial data for trading, portfolio management, banking and insurance use cases, and manufacturing and logistics coordination.

Understand your customers with a complete 360-degree view and provide a streamlined, hyper-personalized experience.

Manage resources for optimum efficiency and revenue generation.

Monitor and track data irregularities that can help you identify anomalies or fraud.

Promote a culture of data trust by bringing all data together from different business units.

Build out data applications for your clients with the latest, freshest data.

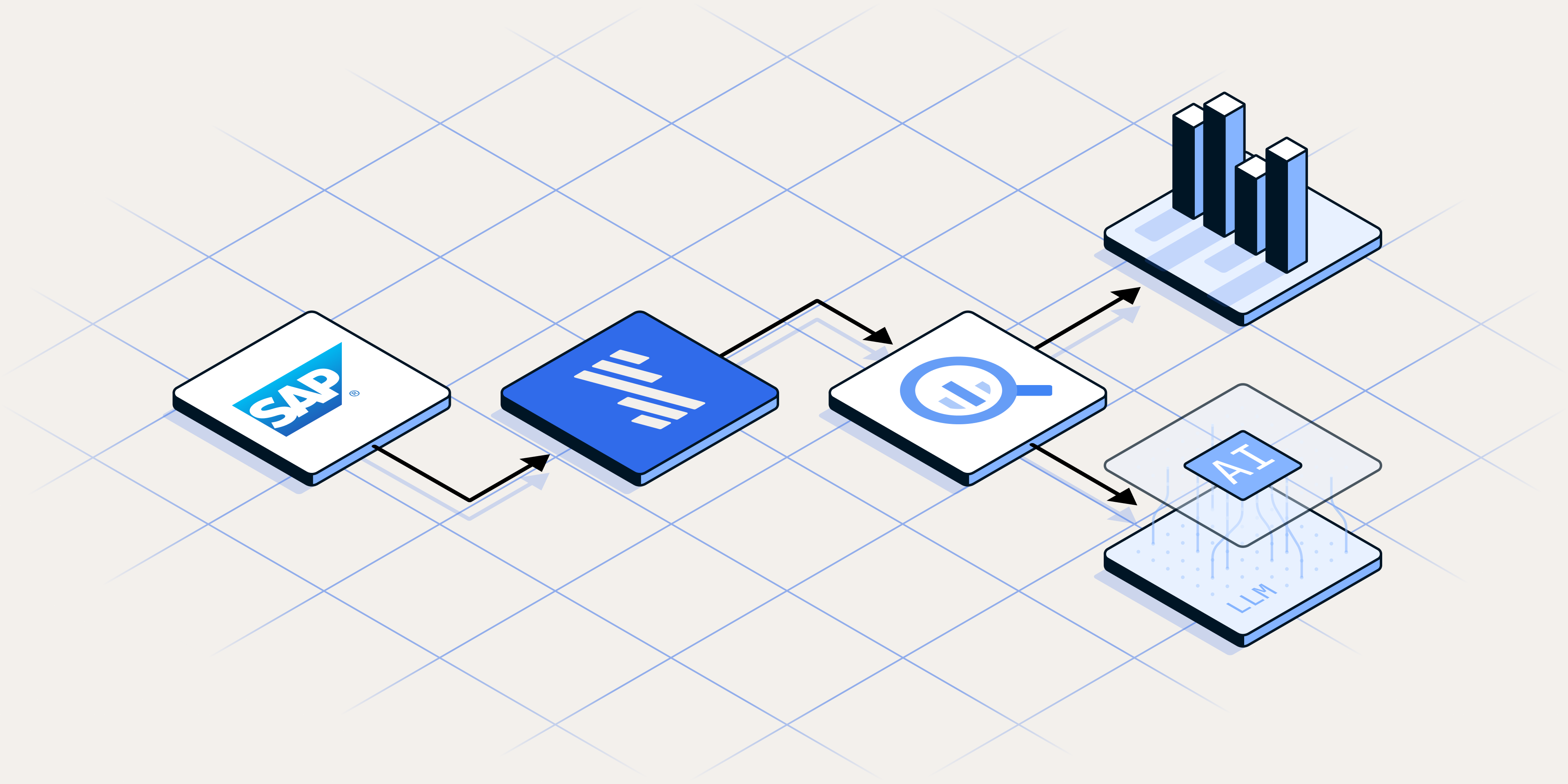

How Fivetran processes your streaming data

Fivetran can load event-based data to your destinations.

Set up a collector (e.g., a webhook) to receive data, or connect to a message broker.

Fivetran pulls, normalizes, deduplicates, maps and unpacks (if selected) your data.

Fivetran writes the data to an internal storage location as a compressed CSV and then batch loads it into your destination.
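The steps above can be pictured with a minimal Python sketch. It is an illustration under stated assumptions, not Fivetran's actual implementation: the function names (`flatten`, `prepare_batch`, `to_compressed_csv`), the `event_id` deduplication key and the underscore-joined column naming are all hypothetical, chosen only to show how events might be normalized, deduplicated, unpacked and staged as a compressed CSV before a batch load.

```python
import csv
import gzip
import io


def flatten(record, prefix=""):
    """Unpack nested dicts into flat, lowercase column names (hypothetical scheme)."""
    flat = {}
    for key, value in record.items():
        column = f"{prefix}{key}".lower()
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{column}_"))
        else:
            flat[column] = value
    return flat


def prepare_batch(events, id_field="event_id", unpack=True):
    """Normalize, deduplicate and optionally unpack a list of event dicts.

    `id_field` is an assumed deduplication key; events without it are kept.
    """
    seen = set()
    rows = []
    for event in events:
        event_id = event.get(id_field)
        if event_id is not None and event_id in seen:
            continue  # skip an event that was delivered more than once
        seen.add(event_id)
        if unpack:
            rows.append(flatten(event))
        else:
            rows.append({key.lower(): value for key, value in event.items()})
    return rows


def to_compressed_csv(rows):
    """Serialize rows to gzip-compressed CSV bytes, ready to stage for a batch load."""
    columns = sorted({column for row in rows for column in row})
    text = io.StringIO()
    writer = csv.DictWriter(text, fieldnames=columns, restval="")
    writer.writeheader()
    writer.writerows(rows)
    return gzip.compress(text.getvalue().encode("utf-8"))


if __name__ == "__main__":
    sample_events = [
        {"event_id": "a1", "User": {"Id": 7, "Plan": "pro"}},
        {"event_id": "a1", "User": {"Id": 7, "Plan": "pro"}},  # duplicate delivery
        {"event_id": "b2", "User": {"Id": 9}},
    ]
    staged = to_compressed_csv(prepare_batch(sample_events))
    print(gzip.decompress(staged).decode("utf-8"))
```

In this sketch the duplicate `a1` event is dropped, nested fields become their own columns, and any column missing from an event is written as an empty value.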

carwow frees up half of data scientists’ time spent on ETL

"We are really happy with the Kafka connector, we have millions of events from Kafka that we sync every day from different tables."

Ioannis Nianios, data engineer, carwow
